Selfhosted

Hello everyone! Mods here 😊 Tell us, what services do you selfhost? Extra points for selfhosted hardware infrastructure. Feel free to take it as a chance to present yourself to the community! 🦎

forum.syncthing.net

forum.syncthing.net

Announcement by the creator: https://forum.syncthing.net/t/discontinuing-syncthing-android/23002 > Unfortunately I don’t have good news on the state of the android app: I am retiring it. The last release on Github and F-Droid will happen with the December 2024 Syncthing version. > Reason is a combination of Google making Play publishing something between hard and impossible and no active maintenance. The app saw no significant development for a long time and without Play releases I do no longer see enough benefit and/or have enough motivation to keep up the ongoing maintenance an app requires even without doing much, if any, changes. > Thanks a lot to everyone who ever contributed to this app!

Hey everyone, it’s me again, one of the two developers behind *[GameVault](https://gamevau.lt)*, a self-hosted gaming platform similar to how Plex/Jellyfin is for your movies and series, but for your game collection. If you've hoarded a bunch of games over the years, this app is going to be your best friend. Think of it as your own personal Steam, hosted on your own server. If you haven’t heard of *GameVault* yet, you can check it out [here](https://gamevau.lt) and get started within 5 minutes—seriously, it’s a game changer. For those who already know *GameVault*, or its old name *He-Who-Must-Not-Be-Named*, **we are excited to tell you we just launched a major update**. I’m talking a massive overhaul—so much so, that we could’ve rebuilt the whole thing from scratch. Here’s the big news: **We’re no longer relying on RAWG or Google Images for game metadata.** Instead, we’ve officially partnered with **IGDB/Twitch** for a more reliable and extended metadata experience! But it doesn’t stop there. We’ve also rolled out a new **plugin system** and a **metadata framework** that allows you to connect to multiple metadata providers at once. It’s never been this cool to run your own Steam-like platform right from your good ol' 19" incher below your desk! **What’s new in this update?** * **IGDB/Twitch Integration**: Say goodbye to unreliable metadata scrapers. Now you can enjoy game info sourced directly from IGDB. * **Customizable Metadata**: Edit and fine-tune game metadata with ease. Your changes are saved separately, so the original data stays intact. * **Plugin System**: Build your own plugins for metadata or connect to as many sources as you want—unlimited flexibility! * **Parental Controls**: Manage age-appropriate access for the family and children. * **Built-in Media Player**: Watch game trailers and gameplay videos directly in GameVault. * **UI Overhaul**: A fresh, streamlined look for the app, community, game and admin interface. * **Halloween Theme**: For GameVault+ users, we’ve added a spooky Halloween skin just in time for the season! **Things to keep in mind when updating:** * *GameVault Client v1.12* is now required for servers running *v13* or above. * Older clients won’t work on servers that have been updated to *v13*. For a smooth update and a guide on how to use all these new features, check out the detailed [migration instructions](https://github.com/Phalcode/gamevault-backend/blob/master/CHANGELOG.md#1300) in the server changelogs. As always, if you hit any snags, feel free to reach out to us on [Discord](https://discord.gg/NEdNen2dSu). If you run into any issues or need help with the migration, feel free to join and open a ticket in our [Discord](https://discord.gg/NEdNen2dSu) community—we’re always happy to help! If you want to support our pet-project and keep most upcoming features of *GameVault* free for everyone, consider [subscribing to **GameVault+**](https://gamevau.lt/gamevault-plus) or making [a one-time donation](https://phalco.de/support-us). Every little bit fuels our passion to keep building and improving! Thanks for everything! We're more than 800 Members on our discord now and I can’t wait to hear what you think of the latest version.

Bitwarden introduced a non-free dependency to their clients. The Bitwarden CTO tried to frame this as a bug but his explanation does not really make it any less concerning. Perhaps it is time for alternative Bitwarden-compatible clients. An open source client that's not based on Electron would be nice. Or move to something else entirely? Are there any other client-server open source password managers?

A decade ago I used BitTorrent Sync. Then it became Resilio Sync. Then with Resilio Sync 2 they nerfed the free app to a point that I just removed that from all my computers and switched to syncthing. Yesterday I was watching my server struggling when syncthing was doing the periodic scan of a directory with hundreds of thousands of files and then i thought, "maybe Resilio Sync uses less resources or doesn't waste time reindexing a static directory for the nth time" I went to see their website and now with the new version 3, all the features are back. The business plan now is to sell the app to enterprises at unaffordable prices rather to persuade consumers to pay a subscription to self host their syncing server I wanted to try it but now they say docker version is discontinued, need to install the package to bare metal. Ugh... So I desisted and decided to stay with syncthing Now with the news of the impending discontinuation of syncthing android app, everything changes. Without Android support, syncthing is no more irreplaceable for me. So, has anyone tried Resilio Sync 3? Is it good?

Any recommendation for a cheap, small [#firewall](https://social.graves.cl/tags/firewall) for my [#homelab](https://social.graves.cl/tags/homelab) ? I realized I can’t control easily what goes out of my network only via DNS block lists Cc [@selfhosted@lemmy.world](https://lemmy.world/c/selfhosted) [@selfhost@lemmy.ml](https://lemmy.ml/c/selfhost)

paravoce.bearblog.dev

paravoce.bearblog.dev

cross-posted from: https://lemmy.world/post/21065836 > Hi friends, as promised, I'm back with my second post. I'll be hanging around in the comments for any questions! > > In this post, I take a look at a typical deployment process, how long each part of it takes, and then I present a simple alternative that I use which is much faster and perfect for hobbit software.

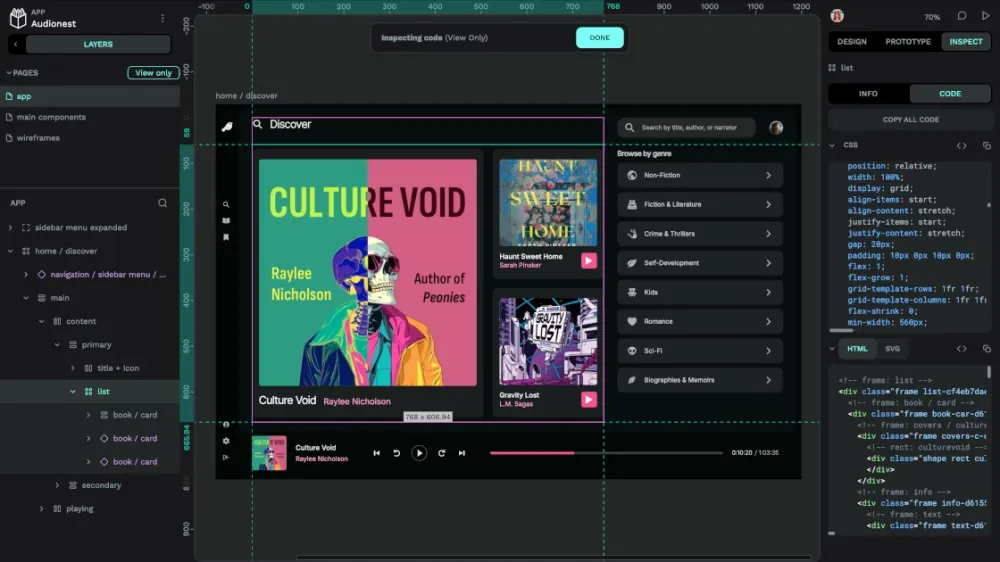

Designers can create stunning designs, interactive prototypes, and design systems at scale, while developers enjoy ready-to-use code and make their workflow easy and fast. **Designed for developers** Penpot was built to serve both designers and developers and create a fluid design-code process. You have the choice to enjoy real-time collaboration or play "solo". **Inspect mode** Work with ready-to-use code and make your workflow easy and fast. The inspect tab gives instant access to SVG, CSS and HTML code. **Self host your own instance** Provide your team or organization with a completely owned collaborative design tool. Use Penpot's cloud service or deploy your own Penpot server. **Integrations** Penpot offers integration into the development toolchain, thanks to its support for webhooks and an API accessible through access tokens.  Self hosting instructions (via docker) can be found here: [https://help.penpot.app/technical-guide/getting-started/#install-with-docker](https://help.penpot.app/technical-guide/getting-started/#install-with-docker)

Hi! I am trying to set up a wireguard client in docker. I use the linuxserver image, I it running in server mode on a different machine (exactly the same ubuntu version) and i can login with my laptop to the wireguard server, but the docker wg-client has problems, i hope someone has an idea :) The client docker container has trouble starting and throws this error: `[___](modprobe: FATAL: Module ip6_tables not found in directory /lib/modules/6.8.0-47-generic ip6tables-restore v1.8.10 (legacy): ip6tables-restore: unable to initialize table 'raw' Error occurred at line: 1 Try 'ip6tables-restore -h' or 'ip6tables-restore --help' for more information. )` I copied the config to the server with the wg server running, it has the same problem with the client. I can ping google.com from inside the server container, but not from inside the client container. Here is the output of the 'route' cmd from the client:` Kernel IP routing table Destination Gateway Genmask Flags Metric Ref Use Iface 172.18.0.0 * 255.255.0.0 U 0 0 0 eth0 ` I searched for a solution quite a bit, but cant seem to find something that works. changed the .yml compose file according to some suggestions but without success. I tried to install the missing module but could not get it working. Its a completely clean install of ubuntu 24.04.1 LTS, Kernel: Linux 6.8.0-47-generic. here is the compose file, in case its needed, it should be exact same one as provided by linux-server in their github: ::: spoiler compose file: ``` services: wireguard: image: lscr.io/linuxserver/wireguard:latest container_name: wireguard-client cap_add: - NET_ADMIN - SYS_MODULE #optional environment: - PUID=1000 - PGID=1000 - TZ=Europe/Berlin # - SERVERURL=wireguard.domain.com #optional # - SERVERPORT=51820 #optional # - PEERS=1 #optional # - PEERDNS=auto #optional # - INTERNAL_SUBNET=10.13.13.0 #optional # - ALLOWEDIPS=0.0.0.0/0 #optional # - PERSISTENTKEEPALIVE_PEERS= #optional # - LOG_CONFS=true #optional volumes: - /srv/wireguard/config:/config # - /lib/modules:/lib/modules #optional ports: - 51820:51820/udp sysctls: - net.ipv4.conf.all.src_valid_mark=1 restart: unless-stopped ``` ::: here is the complete error log from the wg-client docker: ::: spoiler error ``` [migrations] started [migrations] no migrations found usermod: no changes ─────────────────────────────────────── ██╗ ███████╗██╗ ██████╗ ██║ ██╔════╝██║██╔═══██╗ ██║ ███████╗██║██║ ██║ ██║ ╚════██║██║██║ ██║ ███████╗███████║██║╚██████╔╝ ╚══════╝╚══════╝╚═╝ ╚═════╝ Brought to you by linuxserver.io ─────────────────────────────────────── To support the app dev(s) visit: WireGuard: https://www.wireguard.com/donations/ To support LSIO projects visit: https://www.linuxserver.io/donate/ ─────────────────────────────────────── GID/UID ─────────────────────────────────────── User UID: 1000 User GID: 1000 ─────────────────────────────────────── Linuxserver.io version: 1.0.20210914-r4-ls55 Build-date: 2024-10-10T11:23:38+00:00 ─────────────────────────────────────── Uname info: Linux ec3813b50277 6.8.0-47-generic #47-Ubuntu SMP PREEMPT_DYNAMIC Fri Sep 27 21:40:26 UTC 2024 x86_64 GNU/Linux **** It seems the wireguard module is already active. Skipping kernel header install and module compilation. **** **** Client mode selected. **** [custom-init] No custom files found, skipping... **** Disabling CoreDNS **** **** Found WG conf /config/wg_confs/peer1.conf, adding to list **** **** Activating tunnel /config/wg_confs/peer1.conf **** [#] ip link add peer1 type wireguard [#] wg setconf peer1 /dev/fd/63 [#] ip -4 address add 10.13.13.2 dev peer1 [#] ip link set mtu 1420 up dev peer1 [#] resolvconf -a peer1 -m 0 -x s6-rc: fatal: unable to take locks: Resource busy [#] wg set peer1 fwmark 51820 [#] ip -6 route add ::/0 dev peer1 table 51820 [#] ip -6 rule add not fwmark 51820 table 51820 [#] ip -6 rule add table main suppress_prefixlength 0 [#] ip6tables-restore -n modprobe: FATAL: Module ip6_tables not found in directory /lib/modules/6.8.0-47-generic ip6tables-restore v1.8.10 (legacy): ip6tables-restore: unable to initialize table 'raw' Error occurred at line: 1 Try `ip6tables-restore -h' or 'ip6tables-restore --help' for more information. [#] resolvconf -d peer1 -f s6-rc: fatal: unable to take locks: Resource busy [#] ip -6 rule delete table 51820 [#] ip -6 rule delete table main suppress_prefixlength 0 [#] ip link delete dev peer1 **** Tunnel /config/wg_confs/peer1.conf failed, will stop all others! **** **** All tunnels are now down. Please fix the tunnel config /config/wg_confs/peer1.conf and restart the container **** [ls.io-init] done. ``` ::: Thanks a lot. I appreciate every input!

paravoce.bearblog.dev

paravoce.bearblog.dev

cross-posted from: https://lemmy.world/post/21023181 > Sharing some lessons I learned from 10 years/millions of users in production. I’ll be in the comments if anyone has any questions! I hope this series will be useful to the self-hosted and small web crowds—tips for tools to pick and the basics of server management.

Hello everyone! 🎉 I’ve created an RSS Feed Bot that automates sharing news in Lemmy and Fediverse channels, helping to keep Fediverse users better informed. The bot is written in Python3 and can easily run via Docker Compose. Hope you find it useful! 🚀 #Lemmy #Fediverse #RSS #Python #Docker #Automation #OpenSource

My RTX 2060 computer running DarkPlanet 8B: Me: What is isotony? Bot: Isotony, a term coined by the mathematician and physicist Robert Hermann in the 1970s, refers to the property of a Lie algebra that ensures the commutator of any two elements is also an element of the algebra. In other words, isotony (or the isotonicity of the Lie bracket) means that the Lie algebra is closed under the commutator operation. Formally, a Lie algebra G over a field k is said to be isotonic if for any u, v ∈ G, [u, v] ∈ G, where [ , ] denotes the Lie bracket (or commutator). This implies that the Lie algebra is a subset of itself under the commutator operation, making it a Lie subalgebra of itself. Isotony has significant consequences in the theory of Lie algebras and their representations: Cohomology: Isotony is a crucial condition for the development of Lie algebra cohomology, which studies the obstructions to extending Lie algebra homomorphisms. It ensures that the cohomology groups are well-defined. Representations: The isotony property is essential for the classification of finite-dimensional representations of semisimple Lie algebras. It guarantees that the representation space remains unchanged under the commutator operation, facilitating the construction of higher-order tensors and invariant polynomials. Geometry: In the context of differential geometry, isotonic Lie algebras are linked to the structure of geometric objects, such as tangent spaces and cotangent spaces. This relationship is exploited in the study of Lie groups, symplectic manifolds, and Poisson geometry. Physics: In quantum mechanics and gauge theories, isotony is relevant to the definition of observables and their algebraic properties under commutation. The isotonicity of the Lie algebra of observables ensures that the commutator of any two observables remains an observable, which is vital for the consistency of the theory. It's worth noting that not all Lie algebras are isotonic. For instance, the algebra of polynomial vector fields on ℝ² is not isotonic, since the commutator of two such fields may not be a polynomial vector field. In summary, isotony is a fundamental property of Lie algebras that ensures their closure under the commutator operation. It has far-reaching implications for the classification of Lie algebras, their representations, cohomology, and connections to differential geometry and physics. Do you have any more questions about isotony or its applications? Gemini: Me: What is isotony? Bot: "Isotonic" has different meanings depending on the context. Here are the most common ones: 1. In Chemistry/Biology: * Isotonic solutions: Refer to solutions that have the same osmotic pressure. This means they have the same concentration of solutes. Cells placed in an isotonic solution will neither shrink nor swell because there's no net movement of water across the cell membrane. This is important in biology and medicine, especially when administering fluids intravenously. 2. In Exercise Physiology: * Isotonic muscle contractions: Describe muscle contractions where the muscle changes length while maintaining a constant tension. There are two types: * Concentric contractions: The muscle shortens as it contracts (e.g., lifting a weight). * Eccentric contractions: The muscle lengthens as it contracts (e.g., lowering a weight). 3. Other less common meanings: * In music: Refers to equal tones or intervals. * In mathematics: Can sometimes refer to functions that preserve order (though "order-preserving" or "monotone" are more common terms in this context). To understand the specific meaning of "isotonic," it's essential to consider the context in which it's used. Here are some examples to illustrate the different meanings: * "The doctor administered an isotonic saline solution to the patient." (Chemistry/Biology) * "Lifting a dumbbell is an example of an isotonic concentric contraction." (Exercise Physiology) * "The two notes played an isotonic interval." (Music) If you can provide more context about where you encountered the term "isotonic," I can help you pinpoint its specific meaning. ------ **Both were given a mathematical space context before asking the question.**

Evening Lemmy, I have run into a small hiccup in my self-hosting journey. Youtube on my TV in the living room has ads... and they become more unbearable by the day. To that end, I'd like to set up a Raspberry Pi (Or something) to run as a one-stop for media. Ideally, I'd like it to have YouTube (Or more likely NewPipe/FreeTube), Steam Link and access to my Jellyfin instance. More ideally, I'd like this to be controllable with a controller (TV Remote, Steam controller, doesn't matter). The reason for the latter is that I'd rather not create too much trouble for my wife when she uses the TV. I've done some looking, and I seem to be able to get an Amazon Firestick to run NewPipe, and Jellyfin, and maybe even the Steam Link but from the stories I've read it's... less than ideal. So, I was hoping there may be an alternative. The goal is to get all three in one system, with decently user friendly functionality. Has anyone set something similar up, and could you point me in a direction.

I would like to be able to give people an URL and have them be able to access my service. Example I have overseerr currently funneled so when the go to url pcname.tail$$$$$.ts.net they are greeted with overseerr. But if I funneled another service at pcname.tail$$$$$.ts.net/immich for example it does nothing just blank loading but the url knows it should be Immich or another service (I can put the url pcname.tail$$$$$.ts.net/immich in the immich app and have it work). I have tried reversing them so I believe I can not set paths after `.net.`. Am I doing something wrong or is this not supported?

Is there a way to remotely (wired/wireless) update photos in a digital picture frame that doesn't have any connectivity capability? All it has is a USB port and sd card port.

I have a small homelab that is not open to the internet. I am considering the following setup. Please let me know if there are any glaring issues or if I am over complicating things. * I want to setup a reverse proxy in the cloud that will also act as a certificate authority. (I want to limit who can access the server to a small group of people.) * I will setup a vpn from a raspberry pi in my home to the reverse proxy in the cloud. * The traffic will pass from the raspberry pi vpn to my homelab. I am not sure if I need the raspberry pi. I like the cloud as the reverse proxy as I do not have a static IP. I would just get a cheap vps from hetzner or something like that.

I have Grafana and Influxdb setup but it is fairly complex for what I am doing. I don't want to spend a bunch of time creating dashboards and thinking about the movement of data. I am looking for something simple. I am looking to mostly monitor uptime and Ansible automations. Edit: Found this: gethomepage.dev

Hey guys, version 2.5.3 of [Tasks.md](https://github.com/BaldissaraMatheus/Tasks.md) just got released! This release is actually pretty small, as I focused a lot on resolving technical debt, fixing visual inconsistencies and improving "under the hood" stuff. Which I will continue to do a little bit more before the next release. For those who don't know, [Tasks.md](https://github.com/BaldissaraMatheus/Tasks.md) is a self-hosted, Markdown file based task management board. It's like a kanban board that uses your filesystem as a database, so you can manipulate all cards within the app or change them directly through a text editor, changing them in one place will reflect on the other one. The latest release includes the following: - Feature: Generate an initial color for a new tags based on their names - Feature: Add new tag name input validation - Fix: Use environment variables in Dockerfile ENTRYPOINT - Fix: Allow dragging cards when sort is applied - Fix: Fix many visual issues Edit: Updated with the correct link, sorry for the confusion! The fact that someone created another application with the same name I used for the one I made is really annoying

Hi guys! I'm looking to monitor/control the power consumption of some old window-hanging aircon units, that don't really mind when the power is literally cut from the wall. I'd like to be able to see how much power they consume, and also being able to turn them on and off at the socket (the IR doesn't work all that well to begin with). I was checking about the Tapo P110M, but seems these are not sharing the power consumption offline, you need to register them in the app and they only do it through a Tapo account. What alternatives do I have? Important, I guess: As I live off an ex-UK colony here, we do have UK-like three pronged sockets, that's the form factor (Type G, I think?) I'd be needing.

I need to record information about what my cat eats and does, as she might have a food allergy and I need to track down what it is. So I am after some kind of a user friendly locally hosted database (maybe via some kind of app), preferably Linux friendly. It would be nice if it had similar relationships to the added image, some kind of relational DB that I can fill with data. But essentially I need to have a bunch of lookup tables to return some data specific to difference events. Its a bit of a pain (and takes time) to have to write an entire webapp to manage all this from scratch, that's why I am looking for some kind of user friendly GUI way to do it. Surely there must be some kind of relational database managing "application" that lets you set up some lookup tables and enter data in a nice and easy GUI way to do it? `sqllitebrowser` doesn't count as it doesn't handle linked tables in a nice way (would be nice if its friendly for my wife to use) :) Cheers!

Hi people. I am running pihole under podman and its dedicated system account on my NAS. Now, from the NAS, I get a connection refused on `ip.of.the.nas:53` but everywhere else in my network, pihole works perfectly. To run pihole as a rootless container, i made it listen on 1053 and I have a firewall redirection from 53 to 1053 for both udp and tcp. Any pointer to where (and how) I can debug this ? Edit: Small precision about my current setup : ISP router (so I can't really do anything on it) and NAS running opensuse leap

This app has really made my life better so I thought I'd share it. It's a bookmark everything app like raindrop.io or Pocket; except it is self-hosted. It has Firefox & Chrome extensions as well as iOS and Android mobile apps (so it's available pretty much everywhere). You have the option to use AI for auto-tagging or you can not use the feature if AI bothers you. AI can either be your locally hosted LLM or you can use the ChatGPT API. I use it with a locally hosted LLM. I'm not the developer, just a happy user. https://hoarder.app/

Hi there, I’m thinking about what kind of opportunities there is for a portable media center you can have with you in the car, train or whatever. I imagine that the media center would create its own WiFi, so that devices would be able to connect to it and access the media. I know you could do something with a Raspberry Pi, but how could this work in practice? What would be an easy way to access the media from an iPad fx? What software could be used? As a bonus, it would be pretty cool if the media center could connect to a hotel WiFi and then create a hotspot from that. Edit: This would be used when on the move. So you would have the media with you on the media center.

I see a lot of talk of Ollama here, which I personally don't like because: - The quantizations they use tend to be suboptimal - It abstracts away llama.cpp in a way that, frankly, leaves a lot of performance and quality on the table. - It abstracts away things that you should *really* know for hosting LLMs. - I don't like some things about the devs. I won't rant, but I *especially* don't like the hint they're cooking up something commercial. So, here's a quick guide to get away from Ollama. - First step is to pick your OS. Windows is fine, but if setting up something new, linux is best. I favor CachyOS in particular, for its great python performance. If you use Windows, be sure to enable hardware accelerated scheduling and [disable shared memory.](https://support.cognex.com/docs/deep-learning_330/web/EN/deep-learning/Content/deep-learning-Topics/optimization/gpu-disable-shared.htm?TocPath=Optimization%20Guidelines%7CNVIDIA%C2%AE%20GPU%20Guidelines%7C_____6) - Ensure the latest version of CUDA (or ROCm, if using AMD) is installed. Linux is great for this, as many distros package them for you. - Install Python 3.11.x, 3.12.x, or at least whatever your distro supports, and git. If on linux, also install your distro's "build tools" package. Now for *actually* installing the runtime. There are a great number of inference engines supporting different quantizations, forgive the Reddit link but see: https://old.reddit.com/r/LocalLLaMA/comments/1fg3jgr/a_large_table_of_inference_engines_and_supported/ As far as I am concerned, 3 matter to "home" hosters on consumer GPUs: - Exllama (and by extension TabbyAPI), as a very fast, very memory efficient "GPU only" runtime, supports AMD via ROCM and Nvidia via CUDA: https://github.com/theroyallab/tabbyAPI - Aphrodite Engine. While not strictly as vram efficient, its much faster with parallel API calls, reasonably efficient at very short context, and supports just about every quantization under the sun and more exotic models than exllama. AMD/Nvidia only: https://github.com/PygmalionAI/Aphrodite-engine - This fork of kobold.cpp, which supports more fine grained kv cache quantization (we will get to that). It supports CPU offloading and *I think* Apple Metal: https://github.com/Nexesenex/croco.cpp Now, there are also reasons I don't like llama.cpp, but one of the big ones is that sometimes its model implementations have... quality degrading issues, or odd bugs. Hence I would generally recommend TabbyAPI if you have enough vram to avoid offloading to CPU, and can figure out how to set it up. So: - Open a terminal, run `git clone https://github.com/theroyallab/tabbyAPI.git` - `cd tabbyAPI` - Follow this guide for setting up a python venv and installing pytorch and tabbyAPI: https://github.com/theroyallab/tabbyAPI/wiki/01.-Getting-Started#installing This can go wrong, if anyone gets stuck I can help with that. - *Next*, figure out how much VRAM you have. - Figure out how much "context" you want, aka how much text the llm can ingest. If a models has a context length of, say, "8K" that means it can support 8K tokens as input, or less than 8K words. Not all tokenizers are the same, some like Qwen 2.5's can fit nearly a word per token, while others are more in the ballpark of half a work per token or less. - Keep in mind that the actual context length of many models is an outright lie, see: https://github.com/hsiehjackson/RULER - Exllama has a feature called "kv cache quantization" that can dramatically shrink the VRAM the "context" of an LLM takes up. Unlike llama.cpp, it's Q4 cache is basically lossless, and on a model like Command-R, an 80K+ context can take up less than 4GB! Its essential to enable Q4 or Q6 cache to squeeze in as much LLM as you can into your GPU. - With that in mind, you can search huggingface for your desired model. Since we are using tabbyAPI, we want to search for "exl2" quantizations: https://huggingface.co/models?sort=modified&search=exl2 - There are all sorts of finetunes... and a lot of straight-up garbage. But I will post some general recommendations based on total vram: - 4GB: A very small quantization of Qwen 2.5 7B. Or maybe Llama 3B. - 6GB: IMO llama 3.1 8B is best here. There are many finetunes of this depending on what you want (horny chat, tool usage, math, whatever). For coding, I would recommend Qwen 7B coder instead: https://huggingface.co/models?sort=trending&search=qwen+7b+exl2 - 8GB-12GB Qwen 2.5 14B is king! Unlike it's 7B counterpart, I find the 14B version of the model incredible for its size, and it will squeeze into this vram pool (albeit with very short context/tight quantization for the 8GB cards). I would recommend trying Arcee's new distillation in particular: https://huggingface.co/bartowski/SuperNova-Medius-exl2 - 16GB: Mistral 22B, Mistral Coder 22B, and *very tight* quantizations of Qwen 2.5 34B are possible. Honorable mention goes to InternLM 2.5 20B, which is alright even at 128K context. - 20GB-24GB: [Command-R 2024 35B](https://huggingface.co/async0x42/c4ai-command-r-08-2024-exl2_4bpw) is excellent for "in context" work, like asking questions about long documents, continuing long stories, anything involving working "with" the text you feed to an LLM rather than pulling from it's internal knowledge pool. It's also quite goot at longer contexts, out to 64K-80K more-or-less, all of which fits in 24GB. Otherwise, stick to Qwen 2.5 34B, which still has a very respectable 32K native context, and a rather mediocre 64K "extended" context via YaRN: https://huggingface.co/DrNicefellow/Qwen2.5-32B-Instruct-4.25bpw-exl2 - 32GB, same as 24GB, just with a higher bpw quantization. But this is also the threshold were lower bpw quantizations of Qwen 2.5 72B (at short context) start to make sense. - 48GB: Llama 3.1 70B (for longer context) or Qwen 2.5 72B (for 32K context or less) Again, browse huggingface and pick an exl2 quantization that will cleanly fill your vram pool + the amount of context you want to specify in TabbyAPI. Many quantizers such as bartowski will list how much space they take up, but you can also just look at the available filesize. - Now... you have to download the model. Bartowski has instructions [here](https://huggingface.co/bartowski/SuperNova-Medius-exl2#download-instructions), but I prefer to use this nifty standalone tool instead: https://github.com/bodaay/HuggingFaceModelDownloader - Put it in your TabbyAPI models folder, and follow the documentation on the wiki. - There are a lot of options. Some to keep in mind are chunk_size (higher than 2048 will process long contexts faster but take up lots of vram, less will save a little vram), cache_mode (use Q4 for long context, Q6/Q8 for short context if you have room), max_seq_len (this is your context length), tensor_parallel (for faster inference with 2 identical GPUs), and max_batch_size (parallel processing if you have multiple user hitting the tabbyAPI server, but more vram usage) - Now... pick your frontend. The tabbyAPI wiki has a good compliation of community projects, but Open Web UI is very popular right now: https://github.com/open-webui/open-webui I personally use exui: https://github.com/turboderp/exui - And *be careful with your sampling settings when using LLMs*. Different models behave differently, but one of the most common mistakes people make is using "old" sampling parameters for new models. In general, keep temperature very low (<0.1, or even zero) and rep penalty low (1.01?) unless you need long, creative responses. If available in your UI, enable DRY sampling to tamp down repition without "dumbing down" the model with too much temperature or repitition penalty. Always use a MinP of 0.05 or higher and disable other samplers. This is especially important for Chinese models like Qwen, as MinP cuts out "wrong language" answers from the response. - Now, once this is all setup and running, I'd recommend throttling your GPU, as it simply doesn't need its full core speed to maximize its inference speed while generating. For my 3090, I use something like `sudo nvidia-smi -pl 290`, which throttles it down from 420W to 290W. Sorry for the wall of text! I can keep going, discussing kobold.cpp/llama.cpp, Aphrodite, exotic quantization and other niches like that if anyone is interested.

Am using Calibre and audiobookshelf. I'd love a solution where I can search the actual contents of the books. Like being able to search for topics inside all of my books. Would be a cool AI feature - similar to how immich works. Does anyone have a solution for that?

Instructions here: [https://github.com/ghobs91/Self-GPT](https://github.com/ghobs91/Self-GPT) If you’ve ever wanted a ChatGPT-style assistant but fully self-hosted and open source, [Self-GPT](https://github.com/ghobs91/Self-GPT) is a handy script that bundles [Open WebUI](https://github.com/open-webui/open-webui) (chat interface front end) with [Ollama](https://github.com/ollama/ollama) (LLM backend). * **Privacy & Control**: Unlike ChatGPT, everything runs locally, so your data stays with you—great for those concerned about data privacy. * **Cost**: Once set up, self-hosting avoids monthly subscription fees. You’ll need decent hardware (ideally a GPU), but there’s a range of model sizes to fit different setups. * **Flexibility**: Open WebUI and Ollama support multiple models and let you switch between them easily, so you’re not locked into one provider.

Hello, Long time lurker, first time poster and eternal newbie in selfhosting. I have installed cloudflare tunnel in order to allow my Emby installation to be reached externally. (Previously was using tailscale but now trying this solution to expand my 'reach' and include my parents houshold) The tunnel with email OTP works like a charm, but the access seems to be browser specific, so the Emby app doesn't seem to be able to connect (as it faces the email OTP challenge I suppose) Is there a way to combine both? I actually went down the path of writing a little script that tries to authorize the IP of someone that managed to pass the OTP challenge via browser. ( I get the user's IP and update the cloudflare policy via its API) Seems to be overkill, any suggestions? Thx

podcast.james.network

podcast.james.network

I’ve setup my own federated podcast through Castopod, but unsure of how to federate it with Lemmy directly. It is project-focused around FOSS tooling and just enjoying life. Any suggestions on better integrating it with Lemmy? Thanks all. Posted to Technology community as well.

I've been fighting with some issues on my unraid server recently and I'm at a point where I need a graphics card to see the actual video out as the machine boots. The psu in the chassis has no additional connectors that I could use for additional power to a graphics card. Can someone point me in the direction of a super cheap graphics card that will be used for console only and not 3d graphics or gaming.

Update: **I solved my problem.** I got everything working by using [this repo](https://github.com/porelli/firefox-sync), but also by not using LibreWolf - looks like either I'm missing something about its setup, or syncstorage-rs (firefox sync) doesn't handle it well: I noticed that when using it I would get `"ua.os.ver":"UNKNOWN"` in the logs, so maybe it's related. I'm trying to host my Firefox Sync server. I got it running using docker and with instructions from [this github repo](https://github.com/jeena/fxsync-docker). Everything looks fine, I think... I can reach the host and I can reach the `__heartbeat__` endpoint, getting this response: `{"version":"0.13.6","quota":{"enabled":false,"size":0},"database":"Ok","status":"Ok"}`, but nothing seems to sync! I set it up first on my LibreWolf instance and checking the docker container logs look like this: ``` Oct 12 10:43:42.840 INFO Starting 1 workers Oct 12 10:43:42.844 INFO Starting "actix-web-service-0.0.0.0:8000" service on 0.0.0.0:8000 Oct 12 10:43:42.844 INFO Server running on http://0.0.0.0:8000 (mysql) No quota Oct 12 10:43:59.438 INFO {"ua.os.ver":"NT 10.0","ua.name":"Firefox","ua.browser.family":"Firefox","uri.method":"GET","ua.os.family":"Windows","uri.path":"/__heartbeat__","ua.browser.ver":"130.0","ua":"130.0"} Oct 12 10:43:59.706 INFO {"ua.os.ver":"NT 10.0","ua":"130.0","ua.browser.ver":"130.0","ua.os.family":"Windows","uri.path":"/favicon.ico","ua.name":"Firefox","ua.browser.family":"Firefox","uri.method":"GET"} Oct 12 10:44:11.178 INFO {"ua.browser.family":"Firefox","ua.browser.ver":"130.0","uri.method":"GET","uri.path":"/1.0/sync/1.5","ua.os.family":"Linux","token_type":"OAuth","ua.os.ver":"UNKNOWN","ua.name":"Firefox","ua":"130.0"} Oct 12 10:44:11.540 INFO {"ua.name":"HTTP Library","ua.os.family":"Other","ua.browser.family":"Other","ua":"curl","uri.path":"/__heartbeat__","uri.method":"GET","ua.browser.ver":"curl","ua.os.ver":"UNKNOWN"} Oct 12 10:44:11.756 INFO {"ua.os.ver":"UNKNOWN","uri.path":"/1.0/sync/1.5","ua":"130.0","uri.method":"GET","token_type":"OAuth","ua.browser.ver":"130.0","ua.browser.family":"Firefox","ua.name":"Firefox","first_seen_at":"1728729851747","metrics_uid":"fcdfa197568a554e5f5b0a2d05d7b674","ua.os.family":"Linux","uid":"fcdfa197568a554e5f5b0a2d05d7b67452c597ab6caf7770a423378f86d1a4c0"} ``` I set my sync settings to have add-ons, bookmarks and history synced. I installed some add-ons, saved some bookmarks and tried to sync with a new browser profile, then with Firefox on Fedora and Mull on Android, but nothing seems to be moving. Any idea what more to do to troubleshoot this?

I previously asked here [about moving to ZFS](https://lemmy.fwgx.uk/post/266430). So a week on I'm here with an update. TL;DR: Surprisingly simple upgrade. I decided to buy another HBA that came pre-flashed in IT mode and without an onboard BIOS (so that server bootups would be quicker - I'm not using the HBA attached disks as boot disks). For £30 it seems worth the cost to avoid the hassle of flashing it, plus if it all goes wrong I can revert back. I read a whole load about Proxmox PCIE passthrough, most of it out of date it would seem. I am running an AMD system and there are many sugestions online to set grub parameters to `amd_iommu=on`, which when you read in to the kernel parameters for the 6.x version proxmox uses, isn't a valid value. I think I also read that there's no need to set `iommu=pt` on AMD systems. But it's all very confusing as most wikis that should know better are very Intel specific. I eventually saw a youtube video of someone running proxmox 8 on AMD wanting to do the same as I was and they showed that if IOMMU isn't setup, then you get a warning in the web GUI when adding a device. Well that's interesting - I don't get that warning. I am also lucky that the old HBA is in its own IOMMU group, so it should pass through easy without breaking anything. I hope the new one will be the same. Worth noting that there are a lot of bad Youtube videos with people giving bad advise on how to configure a VM for ZFS/TrueNAS use - you need them passed through properly so the VM's OS has full control of them. Which is why an IT HBA is required over an IR one, but just that alone doesn't mean you can't set the config up wrong. I also discovered along the way that my existing file server VM was not setup to be able to handle PCIe passthrough. The default Machine Type that Proxmox suggests - `i440fx` - doesn't support it. So that needs changing to `q35`, also it has to be setup with UEFI. Well that's more of a problem as my VM is using BIOS. A this point it became easier to spin up a new VM with the correct setting and re-do the configuration of it. Other options to be aware of: Memory ballooning needs to be off and the CPU set to `host`. At this point I haven't installed the new HBA yet. Install a fresh version of Ubuntu Server 24.04 LTS and it all feels very snappy. Makes me wonder about my old VM, I think it might be an original install of 16.04 that I have upgraded every 2 years and was migrated over from my old ESXi R710 server a few years ago. Fair play to it, I have had zero issues with it in all that time. Ubuntu server is just absolutely rock solid. Not too much to configure on this VM - SSH, NFS exports, etckeeper, a couple of users and groups. I use etckeeper, so I have a record of the `/etc` of all my VMs that I can look back to, which has come in handy on several occasions. Now almost ready to swap the HBA after I run the final `restic` backup, which only takes 5 mins (I bloody love restic!). Also update the fstabs of VMS so they don't try mount the file server and stop a few from auto starting on boot, just temporarily. Turn the server off and get inside to swap the cards over. Quite straightforward other than the SAS ports being in a worse place for ease of access. Power back on. Amazingly it all came up - last time I tried to add an NVME on a PCIe card it killed the system. Set the PICe passthrough for the HBA on the new VM. Luckily the new HBA is on it's own IOMMU group (maybe that's somehow tied to the PCIE slot?) Make sure to tick the `PCIE` flag so it's not treated as PCI - remember PCI cards?! Now the real deal. Boot the VM, SSH in. `fdisk -l` lists all the disks attached. Well this is good news! Try create the zpool `zpool create storage raidz2 /dev/disk/by-id/XXXXXXX ......` Hmmm, can't do that as it knows it's a raid disk and mdadm has tried to mount it so they're in use. Quite a bit of investigation later with a combination of `wipefs -af /dev/sdX`, `umount /dev/md126`, `mdadm --stop /dev/sd126` and `shutdown -r now` and the RAIDynes of the disks is gone and I can re-run the `zpool` command. It that worked! Note: I forgot to add in `ashift=12` to my zpool creation command, I have only just noticed this as I write, but thankfully it was clever enough to pick the correct one. ``` $ zpool get all | grep ashift storage ashift 0 default ``` Hmmm, what's `0`? ``` $ sudo zdb -l /dev/sdb1 | grep ashift ashift: 12 ``` Phew!!! I also have passed through the USB backup disks I have, mounted them and started the restic backup restore. So far it's 1.503TB in after precisely 5 hours, which seems OK. I'll setup monthly scrub cron jobs tomorrow. P.S. I tried TrueNAS out in a VM with no disks to see what it's all about. It looks very nice, but I don't need any of that fancyness. I've always managed my VM's over SSH which I've felt is lighter weight and less open to attack. Thanks for stopping by my Ted Talk.

I want to set up ufw on my server, but something wrong here. Even when I trying to block 22 port ssh still working and nothing changing. I have ufw enabled, but nothing works.

I would like to scale back my hosting costs and migrate one (or a few) sites over to a machine that I host at home. The bandwidth is more than enough to cover the traffic of these small sites. The simplicity of IPv6 has attracted me to the idea of exposing that server over IPv6 for hosting, while my daily machines remain on the IPv4 side of the stack. I don't care if this means that the sites are reachable by fewer visitors, as the traffic has never been huge. Am I going down a rabbit hole that I will later regret? How would you do this *right*?

Vague title I know, but I'm enough of a beginner at this to not really know what I need to ask! I would like to rent a server, that allows me to spin up different services, including things like Windows to use as a remote desktop. Ideally, I would then be able to just migrate this whole setup to my home server. I thought it would be as easy as renting a scalable VPS, but apparently if you run something like Proxmox on those, you'll get terrible performance? My understanding is that I'd need to rent a bare metal server, but then my 'scalability' will suffer- I can't just wind up and down the specs as needed, correct? My user case: For the next several months, I'm on the road, without a proper computer. I may have some work doing some CAD drafting, hence Windows. I'd also like to have some containers to run some dev tools, databases, web hosting. I'd also like to use the same service to start building my future home server environment- nextcloud, *arr, etc. Once I'm back home, I'd like to easily migrate this setup to a local machine, then continue to use the server as my own cloud and public entry point. And further down the line, hosting a gaming server for friends. In terms of location, Sydney would be great. Will a VPS do this? Or do I need bare metal? Is there a single service that will allow me to do both, with one billing? Or am I doing a Dunning-Kruger? Thanks in advance for your hints.

I'd like to host my own container images centrally in my network so that I can both cache the images (if dockerhub or similar goes down) but also host my own images that I don't want public. Anyone doing this?

I'm looking for a selfhosted app for inventory management **with SSO support**. I already looked ad [Grocy](https://github.com/grocy/grocy) and [Homebox](https://hay-kot.github.io/homebox/). Grocy seems to be a really good app for the purpose. It's overloaded with features but fortunately you can deactivate the ones you don't need. The only thing missing is SSO support via OIDC or SAML. Are there any alternatives that do support SSO?

Hello, Small question to this incredible community. Does anybody have a good suggestion about a link manager with plug-ins for different browsers? If it could also support Samsung browser would be an incredible plus. In my use case I intent to (easily) save some links for reading later and the integration with a mobile browser is fundamental to make the things easy. Thanks in advance!!