LocalLLaMA

Trying something new: I'm going to pin this thread as a place for beginners to ask what may or may not be stupid questions, to encourage both the asking and the answering. Depending on activity level, I'll either make a new one once in a while or just leave this one up forever as a place to learn and ask. When asking a question, try to make clear what your current knowledge level is and where you may have gaps; that should help people provide more useful, concise answers!

https://huggingface.co/collections/Qwen/qwen25-66e81a666513e518adb90d9e Qwen 2.5 0.5B, 1.5B, 3B, 7B, 14B, 32B, and 72B just came out, with some variants in some sizes just for math or coding, and base models too. Most are Apache licensed (the 3B and 72B use Qwen's own licenses), with up to 128K context, and the 128K seems legit (unlike Mistral). And it's pretty sick, with a tokenizer that's more efficient than Mistral's or Cohere's and benchmark scores even better than Llama 3.1 or Mistral in similar sizes, especially on newer metrics like MMLU-Pro and GPQA. I am running the 32B locally, and it seems super smart! As long as the benchmarks aren't straight-up lies or the result of training on the test sets, this is massive, and it just made a whole bunch of models obsolete. Get usable quants here: GGUF: https://huggingface.co/bartowski?search_models=qwen2.5 EXL2: https://huggingface.co/models?sort=modified&search=exl2+qwen2.5

Mistral Small 22B just dropped today and I am blown away by how good it is. I was already impressed with Mistral NeMo 12B's abilities, so I didn't know how much better a 22B could be. It passes really tough obscure trivia that NeMo couldn't, and its reasoning abilities are even more refined. With Mistral Small I have finally reached the plateau of what my hardware can handle for my personal use case. I need my AI to be able to generate at around my base reading speed at least. The lowest I can tolerate is about 1.5 T/s; anything lower than that is unacceptable. I really doubted that a 22B could even run on my measly Nvidia GTX 1070 with 8GB VRAM and 16GB DDR4 RAM. NeMo ran at about 5.5 T/s on this system, so how would Small do? Mistral Small Q4_K_M runs at 2.5 T/s with 28 layers offloaded onto VRAM. As context increases, that number drops to 1.7 T/s. It is absolutely usable for real-time conversation needs. I would like the token speed to be faster, sure, and have considered going with the lowest recommended Q4 to help balance the speed a little. However, I am very happy just to have it running and actually usable in real time. It's crazy to me that such a seemingly advanced model fits on my modest hardware. I'm a little sad now though, since this is as far as I think I can go on the AI self-hosting frontier without investing in a beefier card. Do I need a bigger, smarter model than Mistral Small 22B? No. Hell, NeMo was serving me just fine. But now I want to know just how smart the biggest models get. I caught the AI Acquisition Syndrome!

I just found [https://www.arliai.com/](https://www.arliai.com/), who offer LLM inference for quite cheap. They advertise no rate limits, unlimited token generation, a no-logging policy, and an OpenAI-compatible API. I've been using runpod.io previously, but that's a whole different service: they sell compute, and customers have to build their own Docker images and run them in their cloud, billed by the hour or second. Should I switch to ArliAI? Does anyone have experience with them, or can anyone recommend another nice inference service? I still refuse to pay $1,000 for a GPU and then also pay for electricity when I can use some $5/month cloud service that would last me 16 years before I reach the price of a decent GPU... Edit: Saw their $5 tier only includes models up to 12B parameters, so I'm not sure anymore. For larger models I'd need to pay close to what other inference services cost.
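
For anyone who wants to redo the "16 years" arithmetic with their own numbers, here is a minimal sketch. It only divides the up-front price by the monthly fee; electricity, resale value, and price changes are ignored, and the function name is made up for illustration.

```python
def breakeven_months(hardware_cost: float, monthly_fee: float) -> float:
    """Months of subscription that cost as much as buying the hardware."""
    if monthly_fee <= 0:
        raise ValueError("monthly_fee must be positive")
    return hardware_cost / monthly_fee


# Figures from the post: a $1,000 GPU versus a $5/month service.
months = breakeven_months(1000, 5)
years = months / 12
print(f"{months:.0f} months, about {years:.1f} years")
```

With a more expensive tier the break-even point moves in proportion, so a $20/month plan would reach the same $1,000 in a quarter of the time.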

I'm currently using SuperNormal to take meeting minutes for all of my Teams, Google Meet, and Zoom conference calls. Is there a workflow for doing this locally with Whisper and some other tools? I haven't found one yet.
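
As a rough starting point for the transcription half of such a workflow, here is a sketch using the open-source `openai-whisper` package (`pip install openai-whisper`, which also needs ffmpeg installed). It assumes the call audio has already been recorded to a file; capturing audio from Teams, Meet, or Zoom and summarizing the transcript into minutes with a local LLM are separate steps not shown here. The helper names are hypothetical.

```python
def format_transcript(segments):
    """Turn Whisper-style segments (dicts with 'start' and 'text') into timestamped lines."""
    lines = []
    for seg in segments:
        total = int(seg["start"])
        stamp = f"{total // 3600:02d}:{total % 3600 // 60:02d}:{total % 60:02d}"
        lines.append(f"[{stamp}] {seg['text'].strip()}")
    return "\n".join(lines)


def transcribe_meeting(audio_path, model_name="base"):
    """Transcribe a recorded call locally and return a timestamped transcript."""
    import whisper  # imported lazily so the formatter works without the package

    model = whisper.load_model(model_name)
    result = model.transcribe(audio_path)
    return format_transcript(result["segments"])
```

Calling `transcribe_meeting("standup.wav")` (a placeholder file name) would return text that can then be pasted into whatever local model you use for summarizing.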

Only recently did I discover the text-to-music AI companies (udio.com, suno.com), and I was surprised by how good the results are. Both are being sued by the RIAA. I am curious whether there are any local models I can experiment with or train myself. I know there is facebook/musicgen-large on HuggingFace. That model is over a year old, and there might be others by now. Also, based on the model card, I get the feeling that it is not going to be good at specific song lyrics (maybe the lyrics were just absent from the training data?). I am most interested in trying my hand at writing songs and fine-tuning a model on specific types of music to get the sounds I am looking for.

mistral.ai

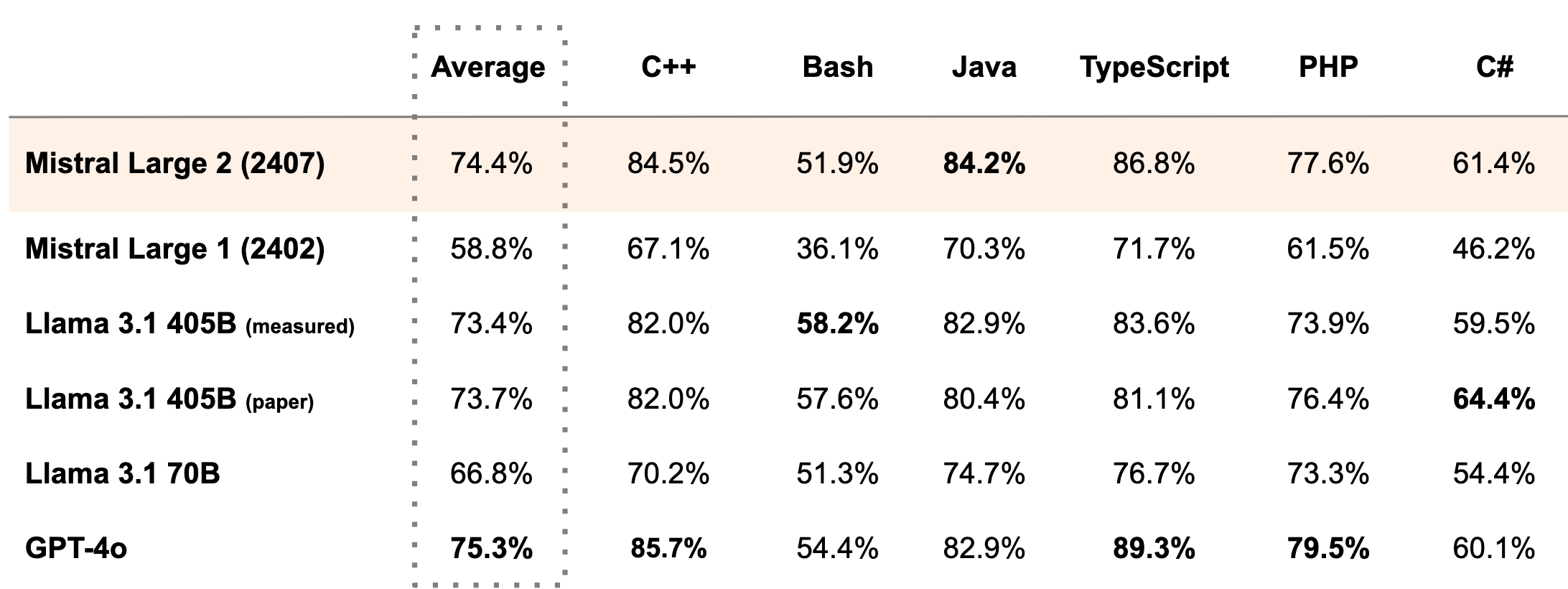

Another day, another model. Just one day after Meta released their new frontier models, Mistral AI surprised us with a new model, Mistral Large 2. It's quite a big one with 123B parameters, so I'm not sure if I would be able to run it at all. However, based on their numbers, it seems to come close to GPT-4o. They claim to be on par with GPT-4o, Claude 3 Opus, and the fresh Llama 3 405B on coding-related tasks. It's multilingual, and from what they said in their blog post, it was also trained on a large coding dataset covering 80+ programming languages. They also claim that it is *"trained to acknowledge when it cannot find solutions or does not have sufficient information to provide a confident answer"*. On the licensing side, it's free for research and non-commercial applications, but you have to pay them for commercial use.

ai.meta.com

Meta has released Llama 3.1. It seems to be a significant improvement over an already quite good model. It is now multilingual, has a 128k context window, has some sort of tool chaining support and, overall, performs better on benchmarks than its predecessor. With this new version, they also released a 405B parameter model, along with updated 70B and 8B versions. I've been using the 3.0 version and was already satisfied, so I'm excited to try this.

Hello y'all, I was using [this guide](https://medium.com/@vpodolian/how-to-run-llama-on-windows-11-4e8027665a67) to try and set up llama again on my machine. I was sure that I was following the instructions to the letter, but when I get to the part where I need to run `setup_cuda.py install`, I get this error: ` File "C:\Users\Mike\miniconda3\Lib\site-packages\torch\utils\cpp_extension.py", line 2419, in _join_cuda_home raise OSError('CUDA_HOME environment variable is not set. ' OSError: CUDA_HOME environment variable is not set. Please set it to your CUDA install root. (base) PS C:\Users\Mike\text-generation-webui\repositories\GPTQ-for-LLaMa>` I'm not a huge coder yet, so I tried to use `setx` to set CUDA_HOME to a few different places, but each time, running `echo %CUDA_HOME` doesn't come up with the address, so I assume it failed, and I still can't run setup_cuda.py. Anyone have any idea what I'm doing wrong?
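
Two things may be worth checking here. `setx` only affects shells opened afterwards, not the one it was run in, and the prompt in the error is PowerShell, where a variable is read as `$env:CUDA_HOME` rather than the cmd.exe form `%CUDA_HOME%`. The sketch below shows one way to see what a fresh Python process can find. The lookup order (CUDA_HOME, then CUDA_PATH, then `nvcc` on the PATH) is an assumption loosely modeled on what PyTorch's extension builder appears to do, not a copy of its code, and `find_cuda_home` is a made-up helper name.

```python
import os
import shutil


def find_cuda_home(env=None, which=shutil.which):
    """Best-effort guess at the CUDA toolkit root, or None if nothing is found."""
    env = os.environ if env is None else env
    # Explicit environment variables win if they are set and non-empty.
    for var in ("CUDA_HOME", "CUDA_PATH"):
        value = env.get(var)
        if value:
            return value
    # Otherwise fall back to wherever nvcc lives: <cuda root>/bin/nvcc.
    nvcc = which("nvcc")
    if nvcc:
        return os.path.dirname(os.path.dirname(nvcc))
    return None


if __name__ == "__main__":
    print(find_cuda_home() or "No CUDA toolkit found; is it installed at all?")
```

If this prints nothing useful from a newly opened terminal, the CUDA toolkit itself (not just the GPU driver) is probably missing, and no value of CUDA_HOME will help until it is installed.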

fuglede.github.io

You type "Once upon a time!!!!!!!!!!" and those exclamation marks are rendered to show the LLM generated text, using a tiny 30MB model via https://simonwillison.net/2024/Jun/23/llama-ttf/

Hello! I am looking for some expertise from you. I have a hobby project where Phi-3-vision fits perfectly. However, the PyTorch version is a little too big for my 8GB video card. I tried looking for a quantized model, but all I found is 4-bit. Unfortunately, this model works too poorly for me. So, for the first time, I came across the task of quantizing a model myself. I found some guides for Phi-3V quantization for ONNX. However, the only options are fp32(?), fp16, int4. Then, I found a nice tool for AutoGPTQ but couldn't make it work for the job yet. Does anybody know why there is no int8/int6 quantization for Phi-3-vision? Also, has anybody used AutoGPTQ for quantization of vision models?
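
To get a feel for why 4-bit can hurt noticeably more than 6-bit or 8-bit, here is a toy sketch of plain symmetric round-to-nearest quantization in pure Python. This is not what AutoGPTQ or the ONNX tooling does (those use calibration data and per-group scales); the weight values are invented for illustration and the function names are hypothetical.

```python
def quantize_roundtrip(weights, bits):
    """Quantize to signed integers with one shared scale, then dequantize."""
    qmax = 2 ** (bits - 1) - 1  # 127 for int8, 31 for int6, 7 for int4
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs else 1.0
    restored = []
    for w in weights:
        q = max(-qmax, min(qmax, round(w / scale)))  # clamp to the integer range
        restored.append(q * scale)
    return restored


def mean_abs_error(weights, bits):
    """Average absolute difference between original and round-tripped weights."""
    restored = quantize_roundtrip(weights, bits)
    return sum(abs(a - b) for a, b in zip(weights, restored)) / len(weights)


# Made-up example weights, not taken from any real model.
weights = [0.013, -0.402, 0.250, 0.091, -0.077, 0.318, -0.199, 0.005]
for bits in (4, 6, 8):
    print(f"int{bits}: mean abs error {mean_abs_error(weights, bits):.5f}")
```

Each extra bit roughly halves the step size, so the rounding error shrinks quickly between int4 and int8, which is why an intermediate format would be attractive on an 8GB card.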

arxiv.org


"Alice has N brothers and she also has M sisters. How many sisters does Alice’s brother have?" The problem has a light quiz style and is arguably no challenge for most adult humans, and probably not for some children either. The scientists posed varying versions of this simple problem to various state-of-the-art LLMs that claim strong reasoning capabilities (GPT-3.5/4/4o, Claude 3 Opus, Gemini, Llama 2/3, Mistral and Mixtral, including the very recent Dbrx and Command R+). They observed a strong collapse of reasoning and an inability to answer the simple question as formulated above across most of the tested models, despite the claimed strong reasoning capabilities. Notable exceptions are Claude 3 Opus and GPT-4, which occasionally manage to provide correct responses. This breakdown can be considered dramatic not only because it happens on such a seemingly simple problem, but also because models tend to express strong overconfidence in reporting their wrong solutions as correct, while often providing confabulations to additionally explain the final answer, mimicking a reasoning-like tone but containing nonsensical arguments as backup for the equally nonsensical, wrong final answers.

* [Arxiv, paper submitted on 4 Jun 2024](https://arxiv.org/abs/2406.02061)
* [PDF](https://arxiv.org/pdf/2406.02061)
* [heise online article (German)](https://www.heise.de/news/Reasoning-Fail-Gaengige-LLMs-scheitern-an-kinderleichter-Aufgabe-9755034.html)

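For reference, the expected answer to the Alice puzzle is M + 1: each brother has all of Alice's M sisters plus Alice herself, and N does not matter. A tiny sketch, assuming all siblings share the same parents (the function name is made up):

```python
def sisters_of_alices_brother(n_brothers: int, m_sisters: int) -> int:
    """Sisters of any one of Alice's brothers: Alice's sisters plus Alice."""
    # n_brothers is deliberately unused; it is the distractor in the puzzle.
    return m_sisters + 1


print(sisters_of_alices_brother(n_brothers=3, m_sisters=2))
```
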
Remember 2-3 years ago when OpenAI had a website called transformer that would complete a sentence to write a bunch of text? Most of it was incoherent, but I think it is important for historic and humor purposes.

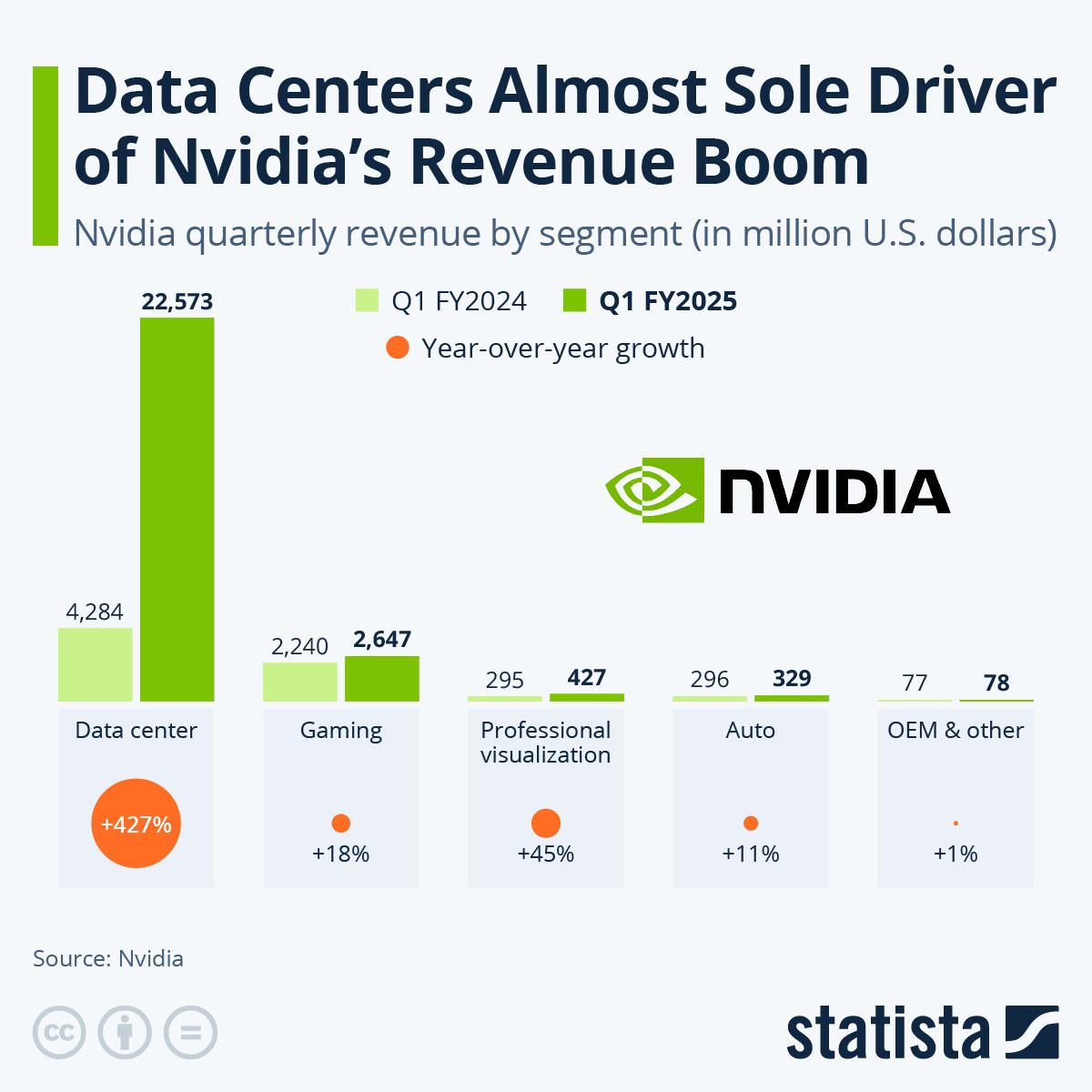

[](https://discuss.tchncs.de/pictrs/image/9ed3d21c-4511-40ef-8a41-3c6c313c2d02.jpeg)

So here's the way I see it: with data center profits being the way they are, I don't think Nvidia's going to do us any favors with GPU pricing next generation. And apparently, the new rule is that Nvidia cards exist to bring AMD prices up. So here's my plan, starting with my current system:

```
OS: Linux Mint 21.2 x86_64
CPU: AMD Ryzen 7 5700G with Radeon Graphics (16) @ 4.673GHz
GPU: NVIDIA GeForce RTX 3060 Lite Hash Rate
GPU: AMD ATI 0b:00.0 Cezanne
GPU: NVIDIA GeForce GTX 1080 Ti
Memory: 4646MiB / 31374MiB
```

I think I'm better off just buying another 3060 or maybe a 4060 Ti 16GB. To be nitpicky, I can get three 3060s for the price of two 4060 Tis and get more VRAM plus a wider memory bus. The 4060 Ti is probably better in the long run; it's just so damn expensive for what you're actually getting. The 3060 really is the working man's compute card. It needs to be on an all-time-greats list.

My limitations are that I don't have room for full-length cards (a 1080 Ti, at 267mm, just barely fits), and I don't want the cursed power connector. Also, I don't really want to buy used because I've lost all faith in humanity and trust in my fellow man, but I realize that's more of a "me" problem. Plus, I'm sure that used P40s and P100s are a great value as far as VRAM goes, but how long are they going to last? I've been using GPGPU since the early days of LuxRender OpenCL and Daz Studio Iray, so I know that sinking feeling when older CUDA versions get dropped from support and my GPU becomes a paperweight. Maxwell is already deprecated, so Pascal's days are definitely numbered.

On the CPU side, I'm upgrading to whatever they announce for Ryzen 9000 and a ton of RAM. Hopefully they have some models without NPUs; I don't think I'll need them.

As far as what I'm running, it's Ollama and Oobabooga, mostly models of 32GB and lower. My goal is to run Mixtral 8x22b, but I'll probably have to run it at a lower quant, maybe one of the 40 or 50GB versions.

My budget: less than Threadripper level. Thanks for listening to my insane ramblings. Any thoughts?

It actually isn't half bad depending on the model. It will not be able to help you with side streets but you can ask for the best route from Texas to Alabama or similar. The results may surprise you.

Current situation: I've got a desktop with 16 GB of DDR4 RAM, a 1st gen Ryzen CPU from 2017, and an AMD RX 6800 XT GPU with 16 GB VRAM. I can run 7-13B models extremely quickly using ollama with ROCm (19+ tokens/sec). I can run Beyonder 4x7b Q6 at around 3 tokens/second. I want to get to a point where I can run Mixtral 8x7b at a Q4 quant at an acceptable speed (5+ tokens/sec). I can run the Mixtral Q3 quant at about 2 to 3 tokens per second. Q4 takes an hour to load, and assuming I don't run out of memory, it also runs at about 2 tokens per second. What's the easiest/cheapest way to get my system to run the higher quants of Mixtral effectively? I know that I need more RAM; another 16 GB should help. Should I upgrade the CPU? As an aside, I also have an older Nvidia GTX 970 lying around that I might be able to stick in the machine. Not sure if ollama can split across different brands of GPU yet, but I know this capability is in llama.cpp now. Thanks for any pointers!
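
A back-of-the-envelope size estimate may help explain the hour-long load. Mixtral 8x7B has about 46.7B total parameters, and a quantized file is roughly parameters times bits per weight divided by 8. The bits-per-weight figures below are rough assumptions for "Q3-ish" and "Q4-ish" quants, not exact values for any specific GGUF file, and the estimate ignores the KV cache and runtime overhead.

```python
def quantized_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight size in GB (10^9 bytes) at a given average bit width."""
    return n_params * bits_per_weight / 8 / 1e9


MIXTRAL_8X7B_PARAMS = 46.7e9  # total parameters across all experts
AVAILABLE_GB = 16 + 16        # VRAM plus system RAM from the post, before OS overhead

for label, bpw in (("Q3-ish", 3.5), ("Q4-ish", 4.5)):
    size = quantized_size_gb(MIXTRAL_8X7B_PARAMS, bpw)
    print(f"{label}: about {size:.1f} GB of weights vs {AVAILABLE_GB} GB total memory")
```

Under these assumptions the Q4 weights alone take most of the combined 32 GB, which leaves little for the OS and context, so swapping during load would not be surprising.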

Recently OpenAI released GPT-4o. A video I found explaining it: https://youtu.be/gy6qZqHz0EI It's a little creepy sometimes, but the voice inflection is kind of wild. What a time to be alive.

I am planning my first AI lab setup and was wondering how many tokens different AI workflows or agent networks eat up on an average day, for instance talking to an AI all day, or having Devin or whatever local agent workflow running 24/7. Of course, model inference speed and type of workflow influence most of these networks, so perhaps it's easier to define the number of tokens per project or result? So I was curious what typical AI workflows lemmies here run, and how many tokens that roughly implies on average, or on a project-level scale. ATM I don't even dare to guess. Thanks.
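
One way to put an upper bound on this is to work from generation speed: a model that generates continuously cannot produce more than speed times time. A minimal sketch, with the 20 tokens/sec figure chosen purely as an example and the function name made up:

```python
def tokens_per_day(tokens_per_second: float, active_hours: float = 24.0) -> int:
    """Upper bound on generated tokens if the model runs flat out for active_hours."""
    return int(tokens_per_second * active_hours * 3600)


# Example: an agent generating nonstop at 20 tokens/sec for a full day.
print(tokens_per_day(20))
# Example: roughly two hours of actual generation spread over a day of chatting.
print(tokens_per_day(20, active_hours=2))
```

Real workloads land well below the bound because of idle time and prompt processing, but it gives a ceiling to compare against hosted per-token pricing.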

Hartford is credited as creator of Dolphin-Mistral, Dolphin-Mixtral and lots of other stuff. He's done a huge amount of work on [uncensored models](https://erichartford.com/uncensored-models).

From Simon Willison: "Mistral [tweet a link](https://twitter.com/MistralAI/status/1777869263778291896) to a 281GB magnet BitTorrent of **Mixtral 8x22B**—their latest openly licensed model release, significantly larger than their previous best open model Mixtral 8x7B. I’ve not seen anyone get this running yet but it’s likely to perform extremely well, given how good the original Mixtral was."

I've been using tie-fighter which hasn't been too bad with lorebooks in tavern.

AFAIK most LLMs run purely on the GPU, don't they? So if I have an Nvidia Titan X with 12GB of VRAM, could I plug it into my laptop and offload the work to it? I am using Fedora, so getting the NVIDIA drivers would be... fun, and probably already a dealbreaker (I wouldn't want to run proprietary drivers on my daily system). I know that people were able to use GPUs externally with ExpressCard adapters, and this is possible with Thunderbolt too, isn't it? The question is, how well does this work? Or would it make more sense to use a small SoC to host a web server for the interface and do all the computing on the GPU? I am curious about the difficulties here: an ARM SoC with proprietary drivers? A laptop over USB-C (maybe not Thunderbolt?) with a GPU just for the AI tasks?

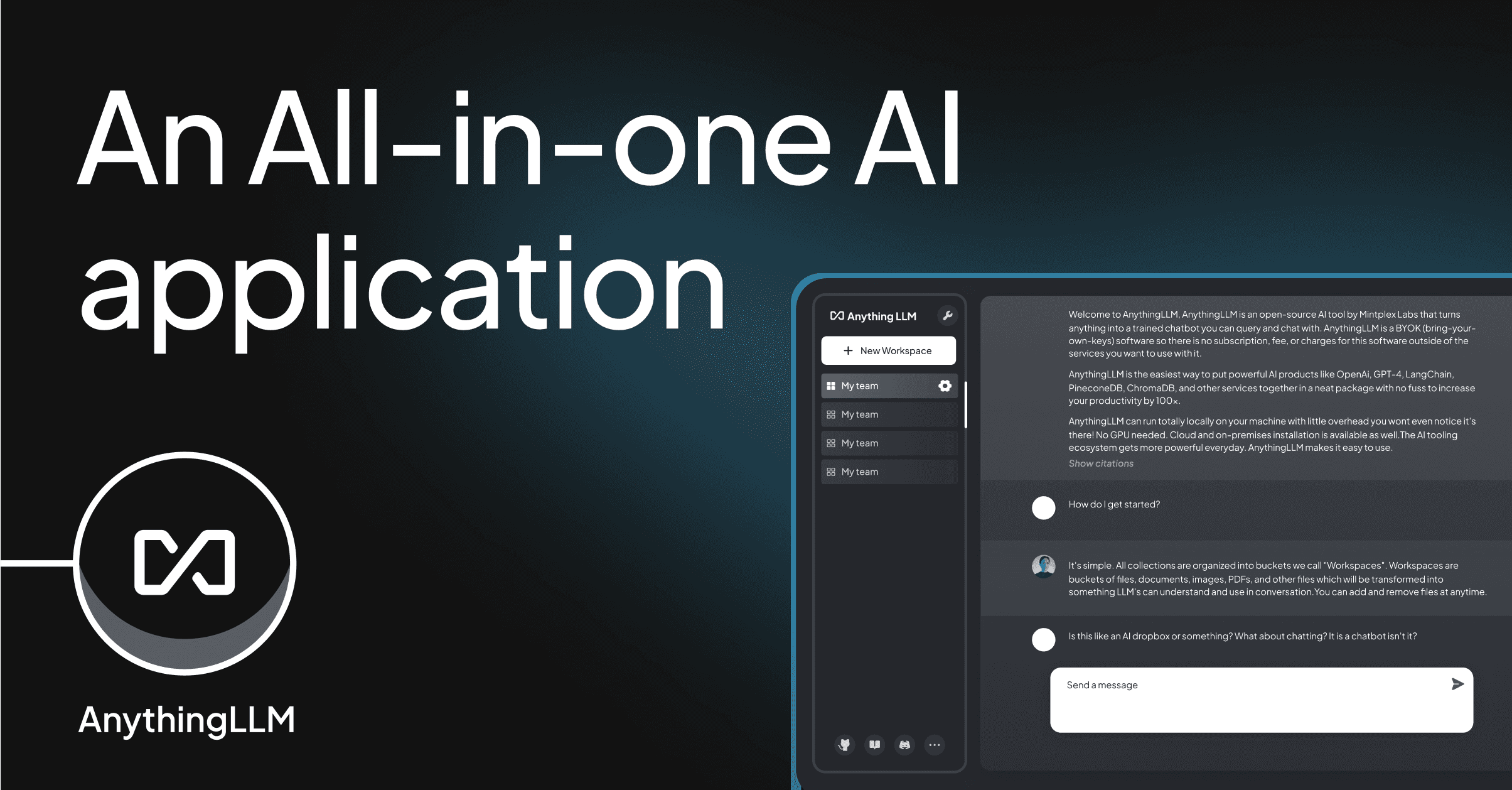

useanything.com

Linux package available like LM Studio

sakana.ai

arXiv: https://arxiv.org/abs/2403.13187 \[cs.NE\]\
GitHub: https://github.com/SakanaAI/evolutionary-model-merge

huggingface.co

arXiv: https://arxiv.org/abs/2403.03507 [cs.LG]

GitHub: https://github.com/mistralai-sf24/hackathon \
X: https://twitter.com/MistralAILabs/status/1771670765521281370

>New release: Mistral 7B v0.2 Base (Raw pretrained model used to train Mistral-7B-Instruct-v0.2)\
>🔸 https://models.mistralcdn.com/mistral-7b-v0-2/mistral-7B-v0.2.tar \
>🔸 32k context window\
>🔸 Rope Theta = 1e6\
>🔸 No sliding window\
>🔸 How to fine-tune:

ollama.com

But in all fairness, it's really llama.cpp that supports AMD. Now looking forward to the Vulkan support!

Excited to share my T-Ragx project! And here are some additional learnings for me that might be interesting to some:

- vector databases aren't always the best option
  - Elasticsearch or custom retrieval methods might work even better in some cases
- LoRA is incredibly powerful for in-task applications
- The pace of the LLM scene is astonishing
  - `TowerInstruct` and `ALMA-R` translation LLMs launched while my project was underway
- Above all, it was so fun!

Please let me know what you think!

So you don't have to click the link, here's the full text including links:

>Some of my favourite @huggingface models I've quantized in the last week (as always, original models are linked in my repo so you can check out any recent changes or documentation!):
>
>@shishirpatil_ gave us gorilla's openfunctions-v2, a great followup to their initial models: https://huggingface.co/bartowski/gorilla-openfunctions-v2-exl2
>
>@fanqiwan released FuseLLM-VaRM, a fusion of 3 architectures and scales: https://huggingface.co/bartowski/FuseChat-7B-VaRM-exl2
>
>@IBM used a new method called LAB (Large-scale Alignment for chatBots) for our first interesting 13B tune in awhile: https://huggingface.co/bartowski/labradorite-13b-exl2
>
>@NeuralNovel released several, but I'm a sucker for DPO models, and this one uses their Neural-DPO dataset: https://huggingface.co/bartowski/Senzu-7B-v0.1-DPO-exl2
>
>Locutusque, who has been making the Hercules dataset, released a preview of "Hyperion": https://huggingface.co/bartowski/hyperion-medium-preview-exl2
>
>@AjinkyaBawase gave an update to his coding models with code-290k based on deepseek 6.7: https://huggingface.co/bartowski/Code-290k-6.7B-Instruct-exl2
>
>@Weyaxi followed up on the success of Einstein v3 with, you guessed it, v4: https://huggingface.co/bartowski/Einstein-v4-7B-exl2
>
>@WenhuChen with TIGER lab released StructLM in 3 sizes for structured knowledge grounding tasks: https://huggingface.co/bartowski/StructLM-7B-exl2
>
>and that's just the highlights from this past week! If you'd like to see your model quantized and I haven't noticed it somehow, feel free to reach out :)